Action Items

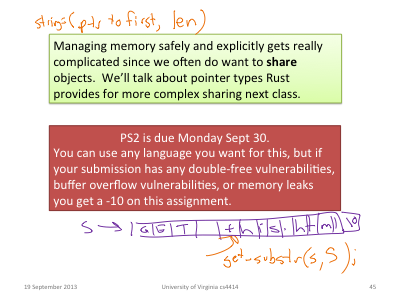

PS2 is due Monday, September 30. It is significantly more challenging than PS1, and you should have already made significant progress on it!

Building a Better Browser

|

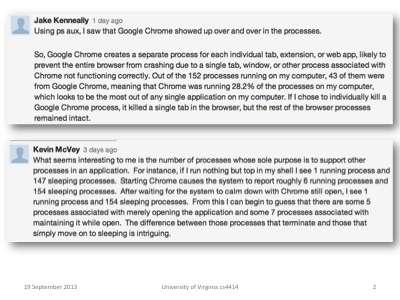

Two of the most interesting comments posted in the class discussion forum for the PS2 exercises about exploring processes concern the seemingly ridiculously high number of processes Chrome has created on your machine. |

Should a browser be creating 43 or 153 processes on your machine? The way engineers should answer questions like this is to think about tradeoffs: do the benefits outweigh the costs? For most problems in computing, the answer changes about every 10 years. (Why every 10 years? If computing power increases according to Moore's Observation, 10 years is enough time for a 50x improvement. It would be really surprising if the relative costs and benefits of things didn't change dramatically when the underlying technology cost changes by 50x.)
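To make the 50x arithmetic concrete, here is a small sketch in Rust. The function names and the doubling periods tried are illustrative assumptions, not figures from the lecture; the only number taken from the notes is the 50x improvement over 10 years.

```rust
/// Improvement factor after `years` if capability doubles every
/// `doubling_period_years`. Both parameters are illustrative assumptions.
fn improvement_factor(years: f64, doubling_period_years: f64) -> f64 {
    2f64.powf(years / doubling_period_years)
}

/// Doubling period (in years) that would produce `factor` after `years`.
fn doubling_period_for(factor: f64, years: f64) -> f64 {
    years / factor.log2()
}
```

Doubling every two years gives `improvement_factor(10.0, 2.0)`, which is 32x over a decade. Working backwards, `doubling_period_for(50.0, 10.0)` comes out at roughly 1.77 years, so the 50x figure corresponds to doubling about every 21 months.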

In the 1990s, memory was very expensive (relative to today), and unnecessary processes waste lots of memory. Using one process for the browser was the right answer.

|

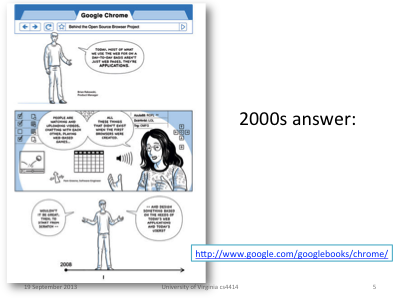

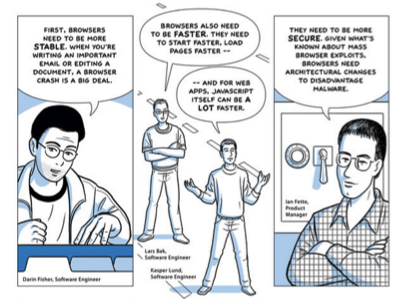

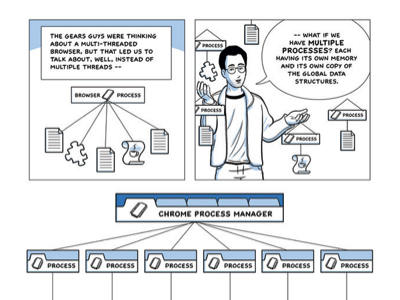

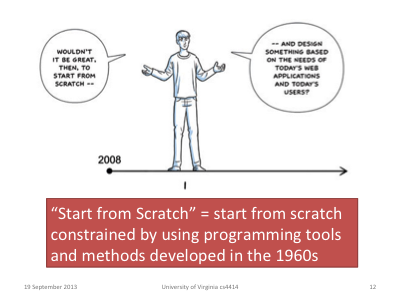

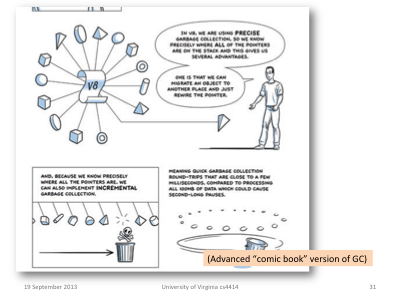

By the 2000s, memory was getting cheap enough that it was worth using lots of memory to build a more robust and secure browser. When Google introduced the Chrome browser in 2008, they did it using a really fantastic comic book! We don't have a required textbook for this class, but if I was required to have one, this would be it - it covers essentially all of the topics we will cover in this class, and has the added benefits of being free and having funny pictures. |

If one process per tab was the correct answer in 2008, surely the answer should have changed by now?

|

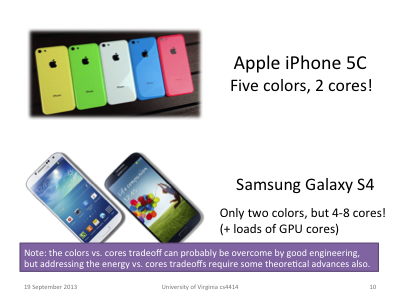

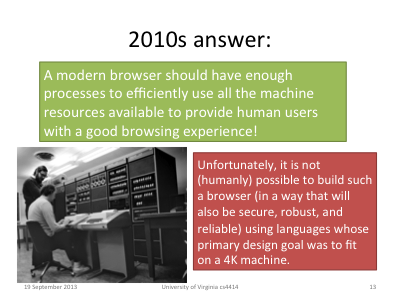

Here's one way things have changed: even your mobile phone today has many cores. Today, you have to make a tough tradeoff between number of cores and number of colors, but engineers at Samsung and Apple are working hard to overcome that challenge. The more fundamental challenge is the energy used by more cores will drain your battery more quickly. This means many-core mobile devices need smart power management, to power-down cores when they are not providing much benefit. |

|

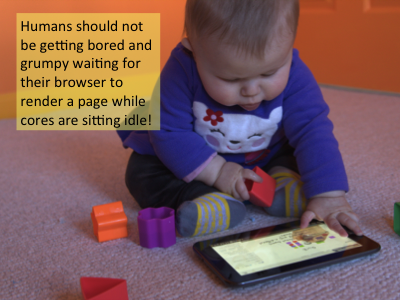

But humans shouldn't waste their precious time and get grumpy waiting for JavaScript programs to finish running in their web browser while useful computing resources sitting idle because your browser is too stupid to be able to use more than one process for the active page! |

There's one big problem: browsers are big, complicated programs and changing how they use processes is not something to be done lightly.

It's even worse (and effectively impossible) if you are using programming tools that dropped the useful features of pre-1960s languages in order to fit into the limited memory of machines available to researchers at Bell Labs in the late 1960s.

|

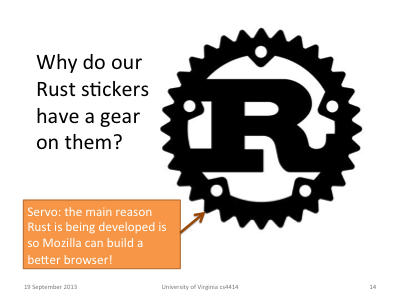

If you want to robusly and securely divide the work of rendering a web page across multiple cores, you need programming tools that make safe concurrency (relatively) easy. This is the main reason Rust is being developed: Mozilla wants to build a better browser that can use modern computers more efficiently, but didn't think this was possible using C++. |
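As a rough illustration of what "safe concurrency" can look like, here is a minimal sketch that splits a list of work items across threads. It is not Servo code, and it uses `std::thread::scope` from current Rust rather than the 2013-era API; the function name `parallel_sum` and the idea of summing per-element "costs" are hypothetical stand-ins for real rendering work.

```rust
use std::thread;

/// Sum hypothetical per-element costs using up to `workers` threads.
/// Each thread only reads its own chunk, and the compiler checks that
/// no thread can outlive the borrowed `items` slice.
fn parallel_sum(items: &[u64], workers: usize) -> u64 {
    if items.is_empty() {
        return 0;
    }
    let workers = workers.max(1);
    // Ceiling division; at least 1 because `items` is non-empty.
    let chunk_len = (items.len() + workers - 1) / workers;

    thread::scope(|scope| {
        // Spawn one scoped thread per chunk.
        let handles: Vec<_> = items
            .chunks(chunk_len)
            .map(|chunk| scope.spawn(move || chunk.iter().sum::<u64>()))
            .collect();

        // Collect the partial sums; all threads are joined before `scope` returns.
        handles
            .into_iter()
            .map(|handle| handle.join().expect("worker thread panicked"))
            .sum::<u64>()
    })
}
```

The point of the sketch is what it leaves out: there are no locks and no manual lifetime bookkeeping, yet a version that let one thread mutate data another thread was reading would be rejected at compile time.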

It's called Servo, hence the gears around the Rust logo. Servo has not yet reached the point where it has its own logo, but it did recently pass a test to be able to render a fairly complex web page correctly. For more: Samsung teams up with Mozilla to build browser engine for multicore machines, Ars Technica, 3 April 2013.

Memory Management

|

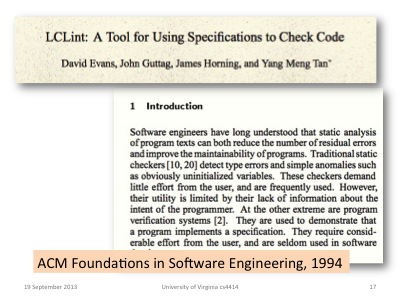

Back when most of the students in this class were learning to crawl, I wrote my first computer science paper (I wasn't a quick starter like my daughter who already has two publications). The full paper is here (but I don't recommend reading it now). |

|

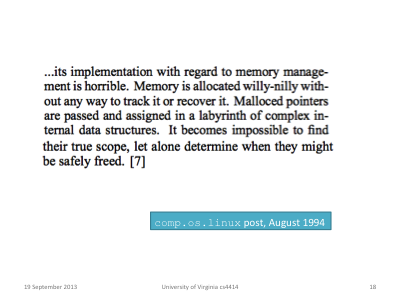

The tool I worked on was used enough for people to start making publicly embarrassing complaints about how bad my code was. (You can download it from http://www.splint.org. Enough people still use it for me to get a few bug reports most weeks. I guess one would need to figure out how buggy my code is, and how likely people are to send in bug reports when they encounter one, to estimate how many people are using it from this, but its sufficient evidence to know it is more than zero which is usually high for an academic research project!) |

Why was my memory management so "willy-nilly"?

|

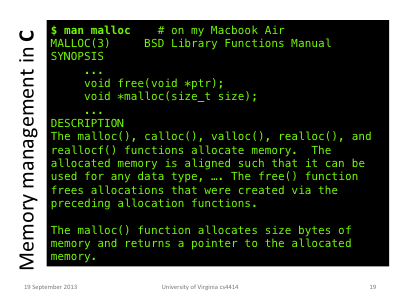

|

|

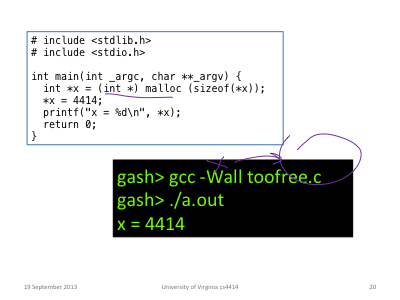

This is almost a "correct" C program (it fails to free allocated memory, but it will all be reclaimed by the OS after the process exits). |

|

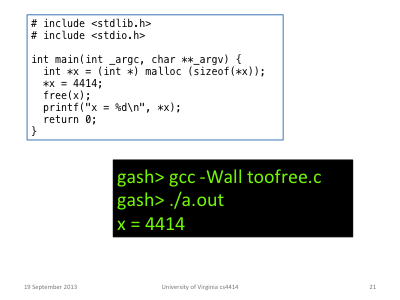

The compiler doesn't have any problems with this one, and on my MacBook Air, it produces the "desired" output. But, its actual behavior is undefined, and with different C compilers and runtimes it could do anything. |

|

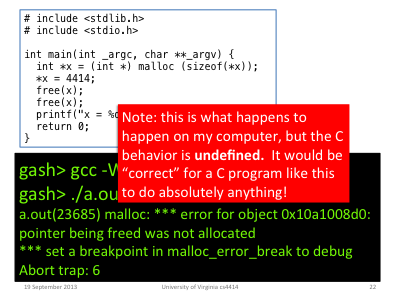

With a second free, it crashes with a helpful error message. This is a big improvement over what would happen on most machines a few years ago! |

|

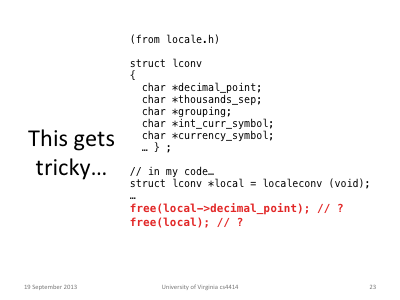

For this example, its pretty obvious where the frees are needed. But in any non-trivial code, it is extremely hard to get it right, and for complex code it is nigh-impossible (without help from sophisticated tools, and then it becomes merely ridiculously hard). |

|

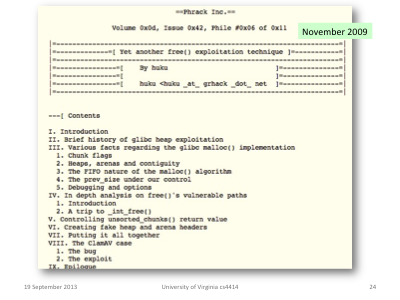

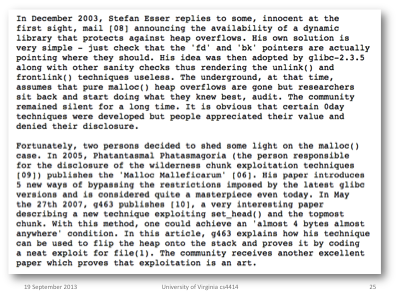

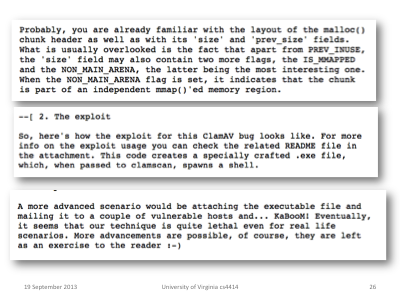

Should we care if our programs occasionally crash? Yes! Really, really, bad things can happen as a result, if an adversary is clever enough and your code is running on an accessible server. For an example, see Yet another free() exploitation technique by huku from Phrack, November 2009. |

Hacker magazine articles are usually more technically and scientifically sound than academic papers, but less well written. I'm not sure whether huku deserves credit for recognizing the possibility of female hackers, or shame for the way he worded it and for using a ;-), but unfortunately you will see worse (in both hacker and academic papers). If you think using sexist language is okay, you should read Douglas R. Hofstadter's A Person Paper on Purity in Language. (Although I've had long discussions with one of Doug's PhD students about whether or not "guys" is a reasonable term for referring inclusively to all people, and am not sure if there is a definitive answer to this, it's best to always err on the side of linguistic inclusiveness.)

C++ has more tasteful names, but otherwise only makes things worse by adding complexity.

Java solves the problem by providing automatic memory management in the run-time system.

|

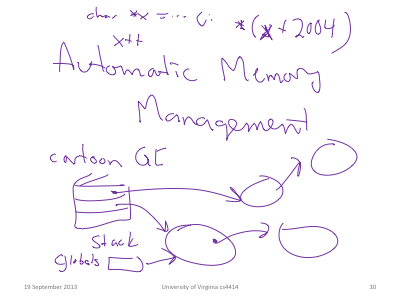

The cartoon version of automatic memory management is to find all the reachable objects by stopping the program and crawling everything you can reach starting from the stack. This is very expensive and means programs may pause noticably even to human users while particularly expensive garbage collection is done. |
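The cartoon version can be sketched in a few lines. This is a toy model only, assuming a hypothetical "heap" where each object is just a list of the indices of the objects it references, and where the roots stand in for the references found on the stack; real collectors are far more involved.

```rust
use std::collections::HashSet;

/// A toy heap: object `i` holds references to the objects listed in `heap[i]`.
/// All indices are assumed to be valid positions in the heap.
type Heap = Vec<Vec<usize>>;

/// Crawl everything reachable from `roots` (standing in for the stack).
fn mark_reachable(heap: &Heap, roots: &[usize]) -> HashSet<usize> {
    let mut marked = HashSet::new();
    let mut worklist: Vec<usize> = roots.to_vec();
    while let Some(obj) = worklist.pop() {
        // `insert` returns false if the object was already marked,
        // which keeps cycles from looping forever.
        if marked.insert(obj) {
            worklist.extend(heap[obj].iter().copied());
        }
    }
    marked
}

/// Everything that was not marked is garbage and could be reclaimed.
fn garbage(heap: &Heap, roots: &[usize]) -> Vec<usize> {
    let marked = mark_reachable(heap, roots);
    (0..heap.len()).filter(|i| !marked.contains(i)).collect()
}
```

For example, with the heap `vec![vec![1], vec![2], vec![], vec![4], vec![3]]` and root `0`, objects 3 and 4 point at each other but nothing reachable points at them, so `garbage` reports both. The cost is visible in the sketch too: the crawl touches every live object, which is why the program has to stop while it runs.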

For a slightly less cartoonish explanation of how garbage collection is done by sophisticated runtimes today, see the Chrome comic book.

This quote is from this paper (which unlike the 1994 one, I do recommend reading if you have time): Static Detection of Dynamic Memory Errors, PLDI 1996.

The way to keep the benefits of explicit memory management, but without the willy-nilly part, is to find ways to manage memory systematically.

Systematic Memory Management

|

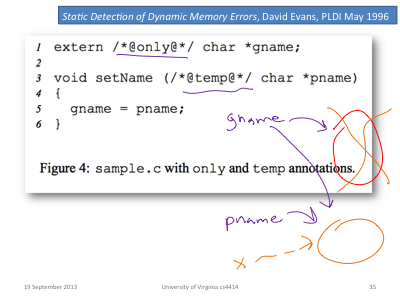

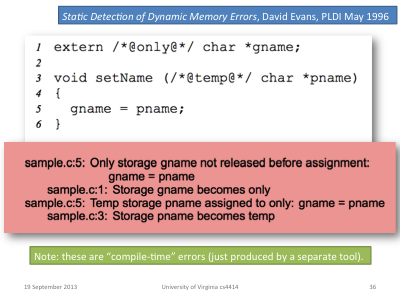

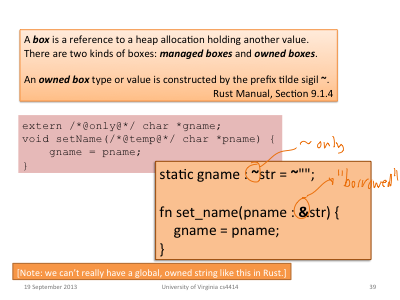

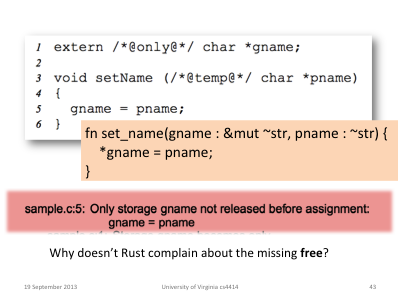

Here's an example from the 1996 paper: we can annotate a pointer reference as only, and it means that reference is the only reference to the storage it points to. This implies rules about what you can, cannot, and must always do when using this reference. |

My tool was constrained by maintaining backwards compatibility with C, so all hints about memory management had to be done using stylized comments. But, if you are building a new language from scratch like Rust, there are many better ways to do things.

|

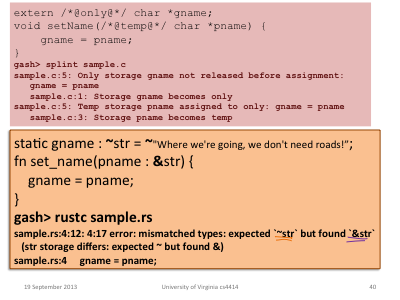

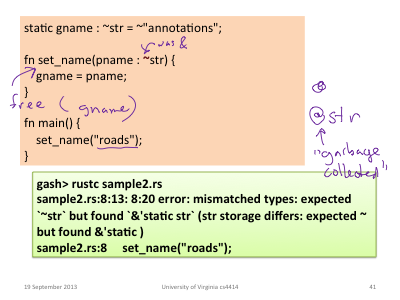

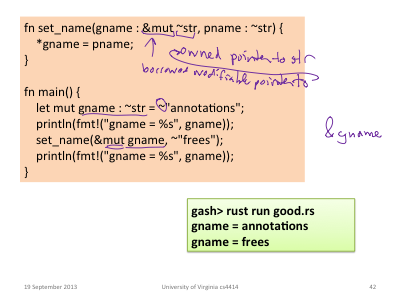

~ indicates an owner reference; & indicates a borrowed (temporary) reference. |

Rust reports misuses of memory as type errors at compile time.
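Here is a minimal sketch of owner and borrowed references. One caveat: it is written in current Rust, where the owner pointer that these notes write as `~` is spelled `Box<T>`. The `Buffer` type and the function names are hypothetical, chosen only for illustration.

```rust
/// A hypothetical heap-allocated resource.
struct Buffer {
    bytes: Vec<u8>,
}

/// Borrow the buffer temporarily: the caller keeps ownership.
fn len_of(buf: &Buffer) -> usize {
    buf.bytes.len()
}

/// Take ownership: the buffer is freed when `buf` goes out of scope here.
fn consume(buf: Box<Buffer>) -> usize {
    buf.bytes.len()
}

fn demo() -> (usize, usize) {
    // `owner` is the only owner of the allocation (the `~` of 2013 Rust).
    let owner: Box<Buffer> = Box::new(Buffer { bytes: vec![1, 2, 3] });

    // A borrowed reference: `owner` is still usable afterwards.
    let borrowed_len = len_of(&owner);

    // Ownership moves into `consume`, which frees the buffer when it returns.
    let consumed_len = consume(owner);

    // len_of(&owner); // rejected at compile time: `owner` was moved

    (borrowed_len, consumed_len)
}
```

Uncommenting the last call turns a would-be use-after-free into a compile error about using a moved value, which is the kind of misuse the C compiler accepted without complaint.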

If a lowly grad student in 1996 was able to write a tool that could warn programmers when they forgot a needed free, surely a modern compiler developed by a team of smart people can figure out how to put in the frees automatically!
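One way to see the compiler putting in the frees is to count them. The sketch below, again in current Rust, uses a hypothetical `Tracked` type whose destructor bumps a counter; nothing in it calls a free function explicitly.

```rust
use std::cell::Cell;

/// A hypothetical resource that records when it is released.
struct Tracked<'a> {
    frees: &'a Cell<u32>,
}

impl Drop for Tracked<'_> {
    fn drop(&mut self) {
        // Runs automatically at the point where the owner goes out of scope.
        self.frees.set(self.frees.get() + 1);
    }
}

fn frees_after_scope() -> u32 {
    let frees = Cell::new(0);
    {
        let _a = Box::new(Tracked { frees: &frees });
        let _b = Box::new(Tracked { frees: &frees });
        // No explicit free: both boxes are released at the closing brace.
    }
    frees.get()
}
```

Calling `frees_after_scope()` returns 2: each owned box is released exactly once, so the leak and the double free from the C examples have no direct equivalent here.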

Real programs need to share memory in much more complex ways than just owned and borrowed references. Rust offers several different ways to enable more complex sharing (including the option to have automatically managed memory). We'll talk more about these next class.
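As a small preview, and only as an assumption about which mechanism is meant, here is one sharing option from current Rust: the reference-counted pointer `std::rc::Rc`, which frees its contents when the last owner goes away. The function and variable names are hypothetical.

```rust
use std::rc::Rc;

/// Returns the owner count while two owners exist, and after one is dropped.
fn shared_owners() -> (usize, usize) {
    let page = Rc::new(String::from("index.html"));

    // A second owner of the same allocation; no data is copied.
    let tab = Rc::clone(&page);
    let during = Rc::strong_count(&page);

    // Dropping one owner does not free the string, since `page` still owns it.
    drop(tab);
    let after = Rc::strong_count(&page);

    (during, after)
}
```

Here `shared_owners()` returns `(2, 1)`. The bookkeeping that had to be done by hand (and by annotation) in C is done by the library type instead.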

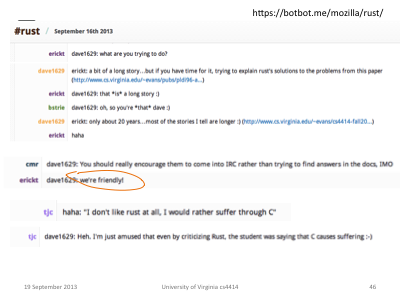

If you run into difficulties with the Rust pointer types, the friendly folks on the Rust IRC channel can probably help you out! You should seek help before suffering unnecessarily. |